One of the most amazing features enabled by machine learning algorithms is the ability to issue text searches on photos and images. Google Photos’s search abilities are one of its headline features. And Apple, too, has been working to improve search in the Photos app that comes with iPhones and other iOS devices.

As an iOS user, I’ve been watching Photos’s search functionality improve over the last couple of years. Although it’s a bit slower than I’d prefer, it’s still very useful. I can search by dates and common terms (e.g. “Halloween”) and often find what I’m looking for. However, sometimes the search yields no results at all — even when the term I’m searching for is a common word.

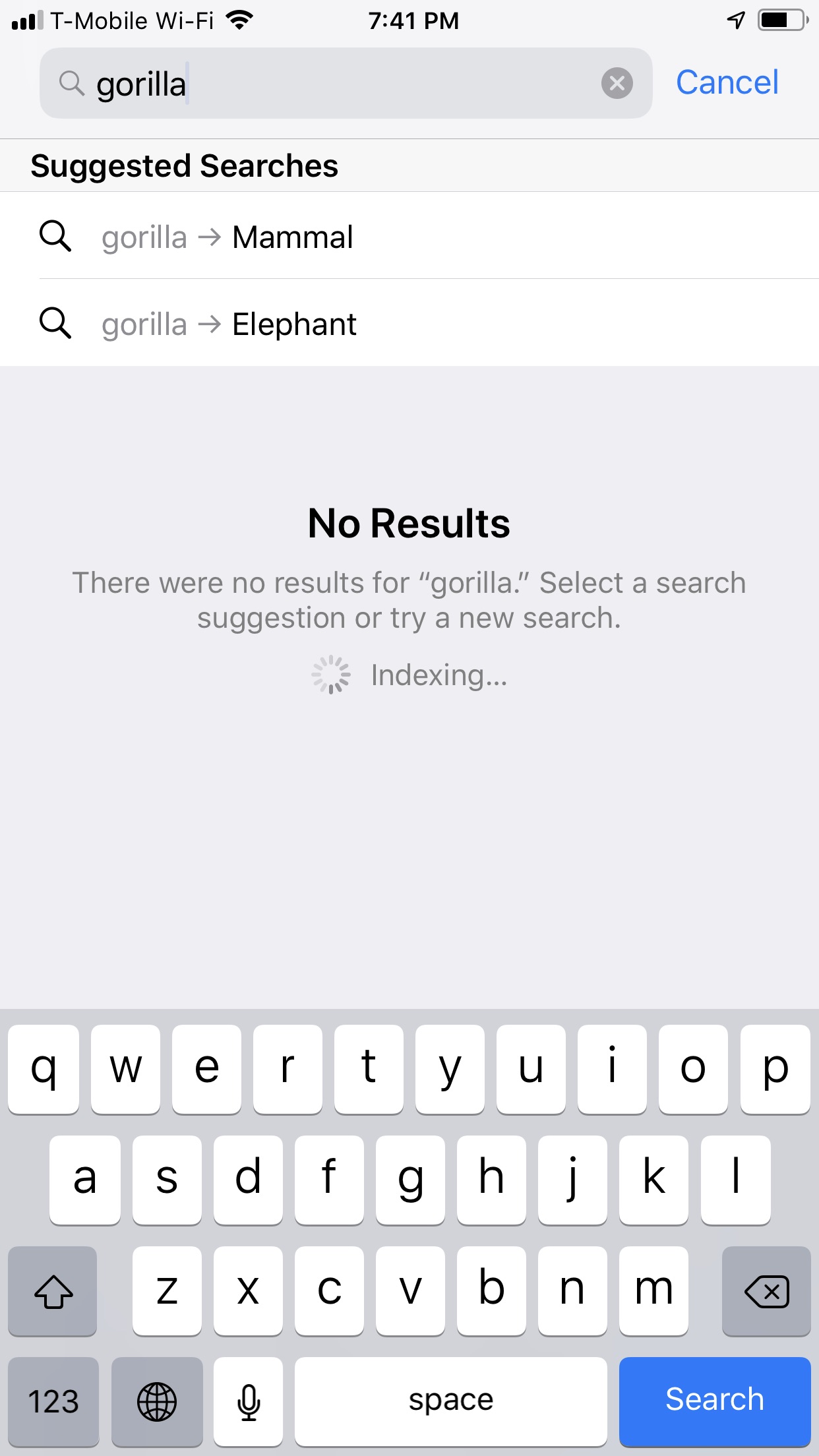

Recently I noticed a change in Photos’s search results UI that makes its operation more transparent:

What’s going on here? I’ve typed the word gorilla into the search box and Photos finds no results. (Yes, I do have photos of gorillas in my collection.) Rather than leave me with nothing, Photos offers to broaden the scope of the search. I’m offered two alternate searches:

- gorilla → Mammal
- gorilla → Elephant

There’s clearly some term mapping happening behind the scenes. That’s not unusual for search systems. What’s intriguing is how Apple has represented the mapping of terms, with the arrow pointing from my original search term to each suggested alternative. Neither alternative is very useful to me in this particular case, but I understand why the system is suggesting these terms. (“Mammal” is a broader category that includes gorillas, and “elephant” is a sibling within that category.) That said, I appreciate the compact clarity of this UI and the ability to change the scope of the search with one tap.
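I have no visibility into how Apple actually implements this, but the suggestions are consistent with a simple taxonomy lookup: when a term has no results, offer its broader category and its siblings. Here is a minimal Python sketch of that idea. The `PARENT` table, the `suggest_alternatives` function, and the `indexed` set of labels are all hypothetical names I made up for illustration, not anything from Photos.

```python
# Hypothetical toy taxonomy: each term maps to its broader category.
# This is an illustrative assumption, not Apple's actual data or method.
PARENT = {
    "gorilla": "mammal",
    "elephant": "mammal",
    "mammal": "animal",
}


def suggest_alternatives(term, parent=PARENT, indexed=frozenset()):
    """Return fallback search terms for a query that found nothing.

    Candidates are the term's broader category followed by its siblings
    (other terms with the same parent), keeping only those that actually
    label at least one photo in the (hypothetical) index.
    """
    term = term.lower()
    broader = parent.get(term)
    if broader is None:
        return []  # unknown term: nothing to suggest

    candidates = [broader]
    candidates += sorted(
        t for t, p in parent.items() if p == broader and t != term
    )
    return [c for c in candidates if c in indexed]


if __name__ == "__main__":
    # Assume the photo index has labels for these terms but not "gorilla".
    indexed_labels = {"mammal", "elephant"}
    for alt in suggest_alternatives("gorilla", indexed=indexed_labels):
        print(f"gorilla → {alt.capitalize()}")
```

With that toy index, the sketch prints the same two suggestions Photos showed me. A real system would presumably rank the candidates and draw on a far larger vocabulary.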