There’s a lot of chatter about artificial intelligence. Much of it stays at a high level: AI is either cast as a magic savior that will free us from all tedium and generate unimaginable riches or as an evil force that will lead to mass unemployment and possibly destroy the world.

As always, I consider both extremes to be off the mark. Rather than dwell on platitudes, I’ve been experimenting with how AI might help with my work in information architecture. In this post, I’ll share an early experiment.

The problem I’m trying to solve

The object of the experiment is my blog. I started that site over twenty years ago in part to have a test bed for new technologies and techniques – i.e., things like AI. The blog now has 1,125 posts: smaller than many but large enough to accommodate experiments that require scale.

The problem I’m trying to solve is one of them. When I migrated the site to Jekyll a few years ago, I lost the ability to present related (e.g., “see also”) articles in each post. This affects bounce rates and time spent on the site. More importantly, it hides valuable content that might help my readers.

It’s not fair to say I lost this ability. Jekyll has a built-in feature that provides this capability to a degree: adding site.related_posts to a Jekyll template returns a list of related posts.

The problem is with what ‘related’ means. By default, the feature returns only the ten most recent posts, which isn’t very useful. It also provides a command to invoke latent semantic indexing, which promises better results. Alas, it also slows down site rendering times.

Webjeda shared a technique to make the default related_posts behavior produce more relevant results without the overhead:

A possible solution was to look for posts with matching category or tag. This will make sure the related posts are actually related. To make it even better we are comparing more categories or tags. If they match then those posts will show up.

This approach does the job – with some caveats. Let me explain how it works. As with other content management systems, Jekyll posts can have tags and categories. This approach identifies posts that share n number of tags or categories in common.

So, for example, if n = 2, Jekyll will identify posts that include the same two tags or categories. One post could have four tags and another three, sharing two in common. Results would include both posts in that case.

n can be any positive integer. The higher the number, the more concordance there will be between posts. So, n = 4 produces more accurate results than n = 2. This improved accuracy comes at the expense of a bit of rendering speed. But a bigger problem is that posts with fewer than n tags aren’t considered part of the set.

As you may imagine, over twenty-plus years, I haven’t always been disciplined about tagging posts with at least n tags. For example, some early posts only have one tag, which makes them invisible from the Webjeda approach.

Since migrating to Jekyll, I’ve considered reviewing all the posts on the site to ensure they all have a minimum of three tags to enable this concordance filter. The obvious problem is time: manually indexing 1,000-plus posts isn’t exactly a “rainy day” type of project. Given my work and teaching commitments, I haven’t prioritized this change.

There are probably Jekyll existing plugins that do what I want. And the system’s built in semantic indexing functionality might also do the trick. But again, part of why I keep a blog is so that I can play around with interesting new technologies. So, let’s see how LLMs can help with this issue.

How an LLM can help

To recap: I have over one thousand text files that I’d like to tag with a minimum of three tags each. This is precisely the type of problem that LLMs can help with. But how? Copying and pasting post content into a chat interface won’t scale for >1k files.

On the other hand, command line tools are perfect for batch text manipulation at scale. I am using two such tools written by Simon Willison: ttok and llm.

The first tool, ttok truncates the text to a number of tokens the LLM can work with. I won’t get into what that means here, but it keeps things from going off the rails.

The second tool, llm, is where the magic happens. As you might guess from its name, it lets you interact with various LLMs in a command line environment. (You can download models to use offline or call OpenAI’s API, which is what I’m doing.)

Using llm, you can interact with the model like you do via a chat interface but use pipes instead to feed it text and work with its output. That sentence may read like gobbledygook if you’re unfamiliar with the command line. An example may help.

Imagine you want the LLM to summarize the contents of a text file called essay.md. You’d write the following command in your terminal app:

cat essay.md | ttok -t 4000 | llm -s "summarize this essay"

This line invokes three tools in sequence: cat, ttok, and llm. The “pipe” (|) character between them feeds the output of one tool to the following tool in the chain. So the output from cat serves as the input to ttok, whose output is then fed into llm.

Here’s what each of them does:

catreads the contents of the fileessay.txtand sends it to standard output (that is, to the command line or the next tool in the chain)ttoktruncates the incoming text after 4,000 tokens (without modifying the file itself)llmprocesses the truncated text with the prompt to summarize the essay (the-sswitch sets a system prompt sollmcan process the piped input)

When you execute the sequence of commands, the output from llm is sent to standard out. From there, you can copy it, save it to a new text file, append it to an existing file, pipe it to other text-processing tools, or simply read the result.

The neat thing about this approach is that the prompt is entirely open-ended. You can ask the LLM to summarize the essay, translate it to another language, rewrite it as a sonnet, or what have you. In our case, we want to know what tags apply to particular blog posts.

A simple LLM categorizer

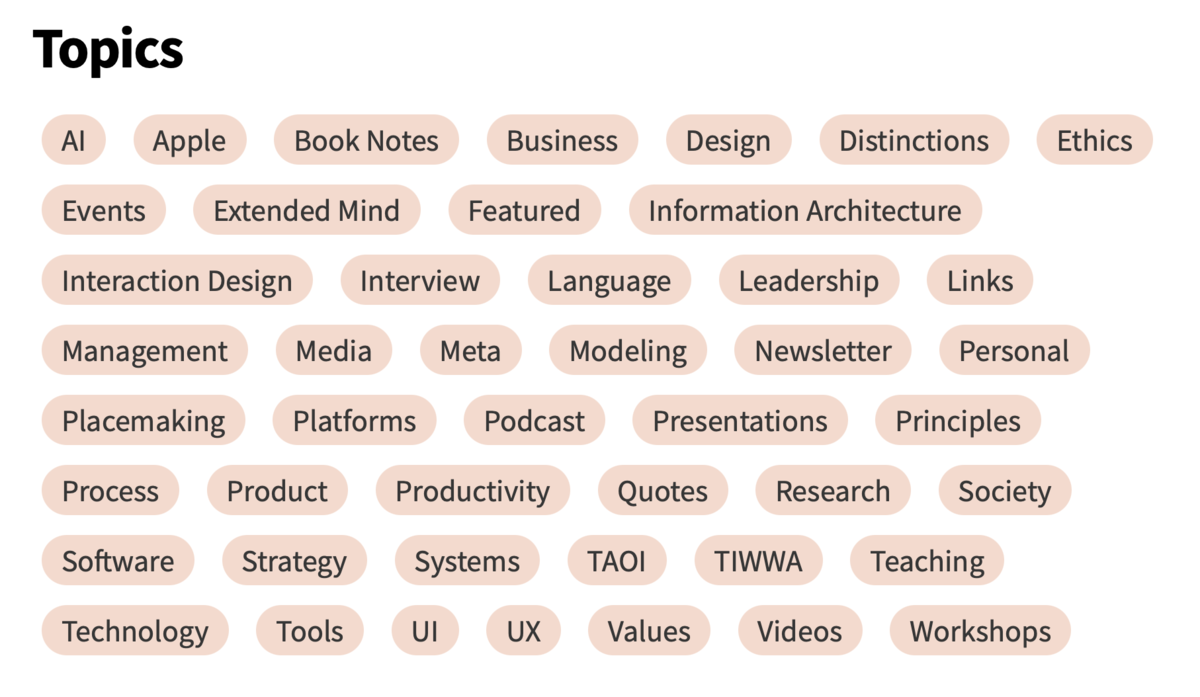

So, let’s get into how I’m using it to tag posts. The first thing you should know is that we’re not starting from scratch. The site already has a set of tags. You can see them in the Archives page:

But I won’t use the list as-is. These tags aren’t all the same. Some are common terms, such as Design, Research, and Tools; I assume the LLM will know what to do with these. But there are a couple of acronyms (TAOI and TIWWA) that the LLM won’t recognize.

Also, I’ve sometimes used tags to describe the content of a post (e.g., Information Architecture) and sometimes to describe its container (e.g., Presentations). I want the LLM to focus on content, so I’m excluding container tags and acronyms from the prompt.

Here’s the prompt I’m passing to llm:

cat essay.md | ttok -t 4000 | llm -s "categorize using three tags from this list: AI, Apple, Business, Design, Distinctions, Ethics, Events, Extended Mind, Information Architecture, Interaction Design, Language, Leadership, Management, Media, Meta, Modeling, Placemaking, Platforms, Podcast, Principles, Process, Product, Productivity, Research, Society, Software, Strategy, Systems, Teaching, Technology, Tools, UI, UX, Value"

When I execute this sequence of commands, the LLM finds the three tags from this list that best describe the file’s text. After a few seconds, it responds with something like:

Categories: Process, Systems, Tools

Transforming output to the right format

This output, in itself, is useful. I can modify the chain of commands to run the prompt on each of my website’s Markdown files one by one and get a list of recommended tags for all posts. However, I’d like the output in a format I can directly save to each post.

In Jekyll, Markdown post tags are stored in YAML front matter at the beginning of files. They look like this:

---

layout: post

title: 'Clarify Your Thinking by Drawing Concept Maps'

tags:

- Systems

- Process

- Tools

---

I want the list of categories rendered as a YAML outline, as seen in the example above. I could parse the Categories: ... output and transform it using other command line tools such as sed or perl. But we’ve got an AI, so why not let it do the transformation for us? It’s easy: just pipe the output from the LLM call to another LLM call, like so:

cat essay.md | ttok -t 4000 | llm -s "categorize using three tags from this list: AI, Apple, Business, Design, Distinctions, Ethics, Events, Extended Mind, Information Architecture, Interaction Design, Language, Leadership, Management, Media, Meta, Modeling, Placemaking, Platforms, Podcast, Principles, Process, Product, Productivity, Research, Society, Software, Strategy, Systems, Teaching, Technology, Tools, UI, UX, Value" | llm -s "convert the list to YAML bullets"

The AI now replies with:

- Process

- Systems

- Tools

We can copy-paste this directly into the file and save it, and we’re good to go.

Taking it to the next level

Well, not quite. Repeating this process 1,125 times would take a long time, even if I’m not reading and categorizing each post myself. So, I’m also experimenting with ways to modify each file automatically. There’s a command line tool called yq that parses and modifies YAML directly in each file. But I won’t get into that here.

Suffice it to say, this technique isn’t limited to tagging blog posts. LLMs are very powerful tools. I’ve also experimented with having them write short excerpts for each post and generate new cover images using DALL-E 3. And since I keep my notes in Obsidian, which also stores data in plain text Markdown files, I can use similar techniques on my personal content.

By the way, this experiment has led me to reconsider the architecture of my public tags. If the LLM doesn’t know what I mean by TAOI or TIWWA, users likely won’t know either. So, those should probably go away. But I’ll leave rearchitecting the site to another time; today, I’m just focused on tagging posts with existing tags.

On that note, I’m also exploring how to use these tools to help me rearchitect the site – i.e., not just tagging posts with a set of existing categories but suggesting new categories that might be relevant and understandable considering the whole set of posts and not just one at a time. At that point, the AI becomes the IA and my role becomes architecting the architect.

A version of this post first appeared in my newsletter. Subscribe to receive posts like this in your inbox every other Sunday.