A version of this post first appeared in my newsletter.

It’s not often that we see the introduction of a new world-changing computing paradigm. And yet, here we are. After years of rumors and speculation, Apple finally announced Vision Pro, their “spatial computing” platform. If you haven’t yet seen the introductory video, please take ten minutes to do so.

Vision Pro is exciting not because of what it can do now (there’s no obvious “killer app” yet) but because it promises a more direct way of interacting with information. The transitions from flipping switches to command lines, then to graphical UIs, and then touchscreens, were about removing layers of abstraction between users and information. Vision Pro removes one more layer: you now simply gaze at stuff.

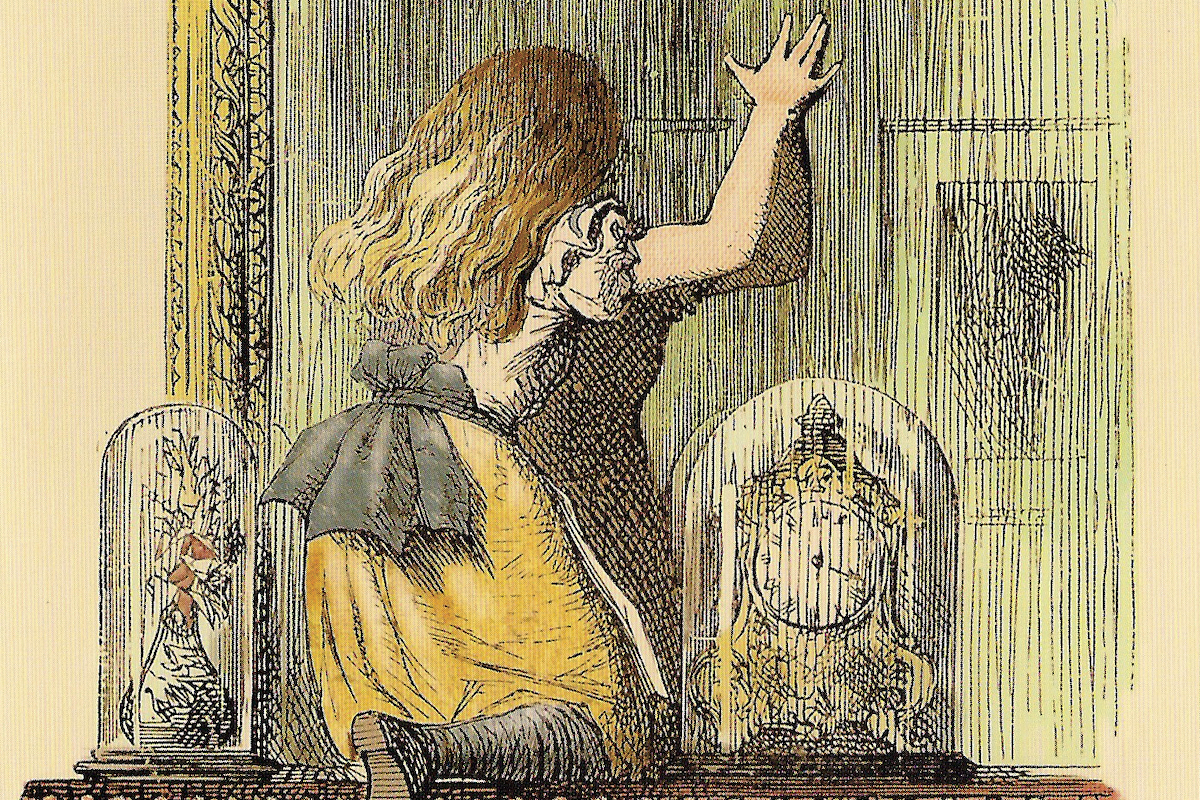

Moreover, the platform inverts the relationship between users and information. Early computers were room-sized devices you walked into to manipulate a few bits using the machine’s binary language. Which is to say, the computer was an imposing “other”: an alien thing with high barriers to entry.

With Vision Pro, the computer disappears as you immerse yourself in a synthetic experience that intercepts and alters your sense of sight and partially alters your sense of hearing. As Tim Cook said, it’s a device “you look through and not at.” You’re no longer interacting with information “out there”; you’re in the information space.

Cook also said that “blending digital content with the real world can unlock experiences like nothing we’ve ever seen.” While that may be true in the long run, both Andy Matuschak and The Economist noted that the introduction highlighted relatively conservative use cases. Most involve doing what we do today with computers: manipulating information in 2-D rectangular windows. The difference is that now we see them in “real” space rather than inside rectangular screens.

The framing seems intentional. (Pardon the pun.) After all, compelling “native” use cases for AR and VR have existed for decades. 3-D gaming is perhaps the most obvious. But Apple avoided these in favor of showcasing better versions of productivity, communication, (passive) entertainment, and photo management tasks. The video describes the visionOS experience as “familiar, yet groundbreaking.”

Back when Steve Jobs introduced the iPhone, he framed it as a revolutionary phone, music player, and internet communicator. While the first-gen device had a camera, it had anemic photographic capabilities. And yet, today, people use their phones to take pictures more often than to make phone calls. But the iPhone launched at a time when people carried cell phones to make calls, so showcasing that use case made it more immediately relevant.

I’m reminded of Wurman’s dictum that you only understand something relative to something you already understand. It’s much easier to project yourself into a new experience if it has use cases you grok and value now — even if those use cases aren’t what ultimately sets the experience apart.

Which is to say, exciting as it is, the Vision Pro’s launch video only hints at what’s coming. Placing spreadsheets around you in virtual space might seem useful now, but entirely new forms of interaction will emerge that make spreadsheets seem quaint. Adoption will spur developers to create those experiences, which will drive further adoption. Baby steps first.

Vision Pro exemplifies Apple’s MO: take clunky early-stage technologies and turn them into useful and desirable products. Apple didn’t invent the tech underlying the Apple II, Mac, iPod, iPhone, or Watch; they merely turned that tech into things non-alpha geeks wanted. Vision Pro will do the same for AR and VR. Combined with AI, these immersive computing experiences will fundamentally change how we interact with information. I’m here for it.