As more things become digital, those of us who design digital things — apps, websites, software — increasingly define how people understand and interact with the world. It’s not uncommon for digital designers to make difficult choices on behalf of others. This requires an ethical commitment to doing the right thing.

For information architects, the critical decisions involve structuring information in particular ways. Choices include:

- What information should be present
- How information should be presented (i.e., in what format or sequence)
- How information should be categorized

The objective is to make information easier to find and understand.

At least in theory. Often, the objective is to make some information easier to find than others. For example, it recently came to light that tax filing software makers such as Intuit and H&R Block set out to steer customers away from their free offerings. Intuit even tweaked its site, apparently to keep public search engines from indexing the product. The goal in this case seems to be not to make information more findable, but less so — while still technically complying with a commitment to “inform.”

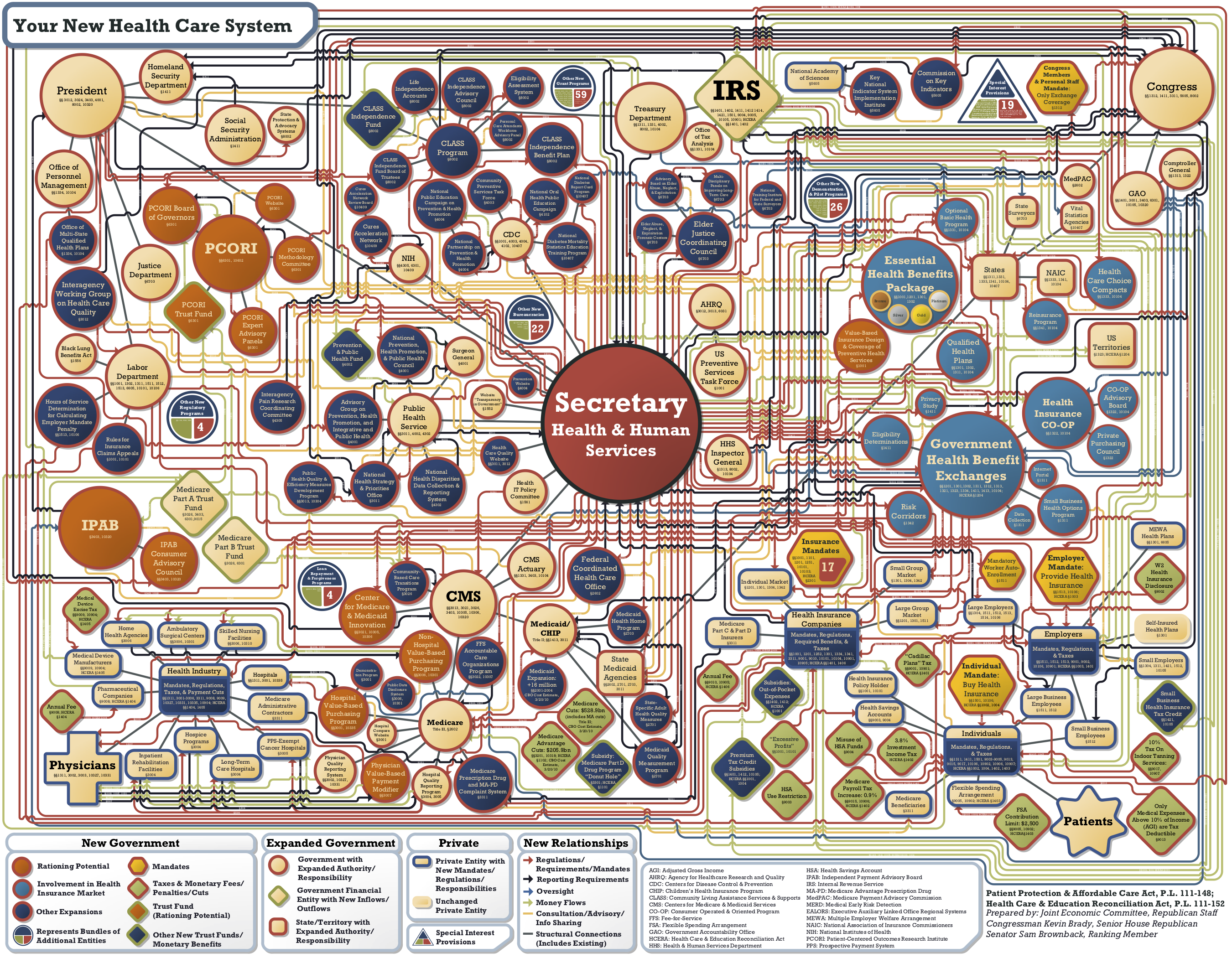

The same is true for understandability. A few years ago, when the Affordable Care Act was being debated in the U.S., a diagram was put forth that purported to explain the implications of the new law:

This is not a neutral artifact. Its primary design objective isn’t to make the ACA more understandable, but to highlight its complexity. (It succeeds.) This diagram intentionally confuses the viewer. As such, it’s ethically compromised.

IA challenges fall on a continuum. On one end of the spectrum, you’re aiming to inform the people who interact with your artifact about a particular domain. On the other end, you’re trying to persuade them.

By “inform,” I mean giving people the information they need so they can make reasonable decisions within a conceptual domain, and presenting this information to them in ways they can understand given their level of expertise. By “persuade,” I mean giving people the information they need so they can behave how we want them to, and presenting it to them in ways that nudge them in that direction.

Informing and persuading are different objectives. In one, you're setting out to increase the person's knowledge so they can make their own decisions. In the other, you're setting out to move them towards specific, predetermined outcomes. In both cases, you're trying to alter behavior, but the motives are different. By informing, you make people smarter. By persuading, you make them acquiescent.

I'm not passing judgment by drawing this distinction. If someone is engaged in self-defeating or otherwise destructive courses of action (e.g., smoking, gambling, driving while intoxicated), setting out to change their behavior could be the compassionate, ethical thing to do. So persuasion isn't bad per se. Also, few projects fall on either extreme of the continuum; most lie somewhere in the middle. (Is it ever possible not to persuade when structuring information? After all, all taxonomies are political. Even this post is an exercise in persuasion.)

That said, if your goal is to make information more findable and understandable, you will sometimes be tested by the need to persuade. If the offering truly adds value to clients and to the world, and aligns with your own values, you're unlikely to face a tough ethical call. Such offerings "sell themselves": the more people know about them and their competitors, the more desirable they become. The problem comes when you're asked to sell a lemon or to nudge people towards goals that are misaligned with their goals, your goals, or society's goals. There's no ethical way to bring balance to such situations; often the appropriate response is to take a "hard pass" (that is, to decline the work altogether).