Last year, I published LLMapper, a tool that uses LLMs to draw concept maps from Wikipedia pages. Last week, I released a new version, packaged as a Claude Skill, that integrates more closely with Claude and gains new abilities as a result.

Here’s a sample concept map drawn with the LLMapper Skill:

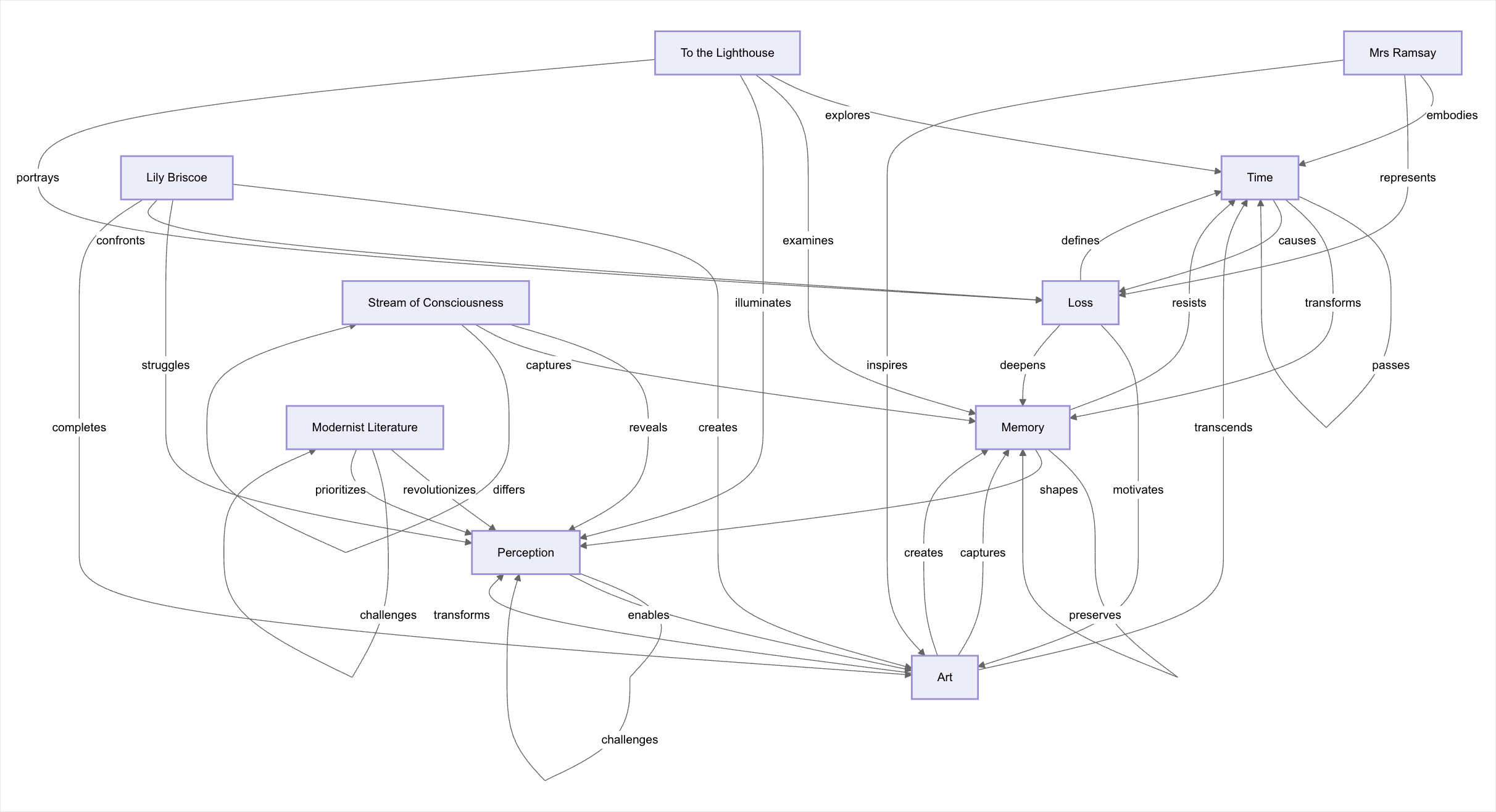

An AI-generated concept map of Virginia Woolf’s To the Lighthouse

A bit of background: A concept map is a diagram that shows relationships between concepts. It consists of nodes that represent ideas and labeled arrows that represent relationships. Seeing these conceptual distinctions and relationships laid out helps visual thinkers understand complex ideas quickly.
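To make the structure concrete, here is a minimal sketch of a concept map as data: a list of labeled arrows, each joining two concepts. The names (`Link`, `concepts`, `propositions`) are hypothetical and this is not how LLMapper itself stores maps; the example concepts are taken from the paragraph above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Link:
    """One labeled arrow: source concept -> relationship label -> target concept."""
    source: str
    label: str
    target: str


def concepts(links: list[Link]) -> set[str]:
    """Collect every node (concept) that appears at either end of an arrow."""
    return {c for link in links for c in (link.source, link.target)}


def propositions(links: list[Link]) -> list[str]:
    """Read each labeled arrow as a short statement linking two concepts."""
    return [f"{l.source} -> {l.label} -> {l.target}" for l in links]


# A tiny concept map describing concept maps themselves.
example = [
    Link("concept maps", "consist of", "nodes"),
    Link("concept maps", "consist of", "labeled arrows"),
    Link("nodes", "represent", "ideas"),
    Link("labeled arrows", "represent", "relationships"),
]

for line in propositions(example):
    print(line)
```

Storing the arrows as the primary data keeps the labels attached to the relationships; the set of nodes can always be derived from them.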

Back in 2024, AI struggled with concept maps. You could ask multimodal chatbots to make them, but the output was junk. By then, I’d been teaching human students to draw them for years, and I thought it’d be a neat exercise to get LLMs to draw them as well. The result was the first version of LLMapper. (Here are some of the concept maps it drew.)

Last month, Anthropic released a new feature called Agent Skills. It’s a way to package specific abilities that Claude can use on the web, in the desktop app, or in Claude Code. I thought it’d be fun to give Claude the ability to draw concept maps, so I used Claude Code to convert LLMapper into a Skill, adding a bunch of new features along the way.

Download the LLMapper Skill from GitHub. The video above shows how to install it in Claude on the web (which requires a paid account, alas). I hope you find this Skill fun and useful!