Much of the talk around AI centers on solving big problems: writing software, self-driving vehicles, curing diseases, etc. These are exciting use cases. But there are also lots of small “paper cut” use cases where AI can help.

Some alleviate existing chores, but others make possible previously impractical tasks. They won’t eliminate any jobs: nobody would’ve been hired to do these things because their outcome isn’t worth the effort. But that doesn’t mean they aren’t valuable. They just haven’t been worth it — until now. Here’s an example.

I’ve consolidated most of my notes into Obsidian, which has become my “source of truth” for things like meeting minutes and book excerpts. That said, I still often write longhand, either with pen/paper or an iPad.

I suspect this is a common scenario. Lots of people live in both worlds. But they are different worlds: Obsidian notes are text files; handwritten notes are… something else. For our purposes, we’ll call them “analog” — but of course, that’s not correct: notes scribbled on an iPad are digital. Still, they’re not Markdown — and that’s what matters here.

For a long time, I created notes in Obsidian and dropped in the corresponding PDFs, adding a bit of metadata. These “wrapper” notes make the PDFs better Obsidian citizens. But Obsidian can’t reference the content inside the PDFs, since it’s not text. And, of course, we don’t get the Markdown text in the note’s body.

So here’s the use case: I want my analog notes in Obsidian as PDFs and Markdown. I’ve built a little script that does it automatically. Before I explain how it works, allow me a brief digression.

Why two note-taking systems?

You may be wondering, “If you want Obsidian to be the source of truth, why not type all your notes there and be done with it?”

That would indeed be easier. The problem is that different note-taking media support different cognitive abilities. When I type, I think “linearly.” That’s fine for essays and book notes, but not as good when I’m brainstorming or planning. I also pay attention better in meetings when I write longhand.

We think with things — and we think differently with different things. My mind works one way when I type and another when I scribble. Keeping the stylus or pen moving helps me focus. Over time, I’ve learned which modalities work best for me for different cognitive tasks in various contexts.

I’m leaning into these differences. So handwriting + digital text it is. (If you’d like to learn more about different note-taking modalities, check out my book Duly Noted.)

Bringing both together

Most note-taking apps allow you to export your handwriting as plain text. I could copy these transcriptions and paste them into Obsidian. But I’ve gotten mixed results with these transcriptions. The process is also tedious, since you must manually select each text to be transcribed.

Another downside is that this won’t work for paper-based notes. I use Scanner Pro, which does quick work of scanning notebooks and loose sheets, saves them as PDFs, and automatically uploads them to Dropbox.

Notability also backs up notes automatically as PDFs. Both these PDFs and Scanner Pro’s are automatically OCRed so they’re searchable, but the results aren’t as accurate as I’d like.

Using local AI to transcribe notes

This is a good use case for AIs. Models have long been capable of accurately transcribing handwriting. The problem is that my notes often include sensitive stuff: meeting minutes, bank account numbers, and other things I wouldn’t want leaked by accident. (I’m already wary of hosting them in Dropbox!)

What if the transcription happened locally? I knew there are solid locally-hosted models with vision capabilities, so I set out to experiment. I’ve landed on a setup that’s working exceedingly well. Before I describe it, I should note upfront that I’m on a 32GB M2 Max MacBook Pro. A smaller machine might not suffice.

Here are the components:

- Notability/Scanner Pro: source of the handwritten PDFs.

- Dropbox: syncs PDFs from both apps onto my Mac.

- Hazel: a Mac automation utility that performs predefined actions when things change in the filesystem.

- llm: Simon Willison’s tool for using LLMs in command line environments.

- pdftoppm: a command line tool that converts PDFs to bitmaps.

- Ollama: for hosting local models.

- mistral-small3.1: the “open weights” LLM that worked best at transcribing my handwriting.

I’m not going into how to set up these here; there are lots of resources for that. Here’s how the workflow works:

- Notability or Scanner Pro upload a new PDF to their respective directories on Dropbox.

- Hazel monitors both of these directories; when a new PDF appears in either, it copies the PDF to another directory called

~/Workflows/Handwritten Notes. (I won’t get into how that works; look up Hazel if you’re interested.) - When a new PDF shows up in

~/Workflows/Handwritten Notes, Hazel fires off this shell script:

# Convert each page in the PDF to a PNG and

# save it in a temp directory.

/opt/homebrew/bin/pdftoppm -png "$1" tmp/page

# Process each PNG image to transcribe it using the llm tool.

# Save the output to a temporary Markdown file.

for png_file in tmp/page-*.png; do

/Users/jarango/.venv/bin/llm -t pdf-scanner -a $png_file >> "$1.md"

done

# Copy the temp md file to my Obsidian Projects vault.

# Copy the PDF to the _assets directory in the vault.

filename=$(basename "$1")

filename_no_ext="${filename%.*}"

echo "![[$filename]]" >> "/Users/jarango/Notes/Projects/$filename_no_ext.md"

echo "\n\n" >> "/Users/jarango/Notes/Projects/$filename_no_ext.md"

cat "$1.md" >> "/Users/jarango/Notes/Projects/$filename_no_ext.md"

mv "$1" "/Users/jarango/Notes/Projects/_assets"

# Clean up the temp files.

rm "$1.md"

rm tmp/*

The rest of the steps are documented in the script itself. The gist is that the script converts the PDFs to PNGs, one per page. Then, it passes each page to an LLM for transcription. When it’s done, it saves both the transcript and the PDF to my Obsidian vault.

This is standard Unix shell scripting, so I won’t elaborate beyond the comments in the script itself. (Yes, I realize this could be more robust — that said, it’s worked fine for months.) I’ll just make two points about the stuff that might seem weird.

First, why convert the PDFs to images? For one, I’m using a model that doesn’t read PDFs natively. And while there’s a plugin for llm that allows it to read PDFs, it chokes with longer docs. Passing each page as a PNG is the most reliable approach I’ve found given my Mac’s constraints.

Second, you may be wondering about the line that calls llm. Where’s the prompt? The answer is, I’m using an homegrown llm template called pdf-scanner that includes the model definition, system prompt, and user prompt.

Templates are a great feature of llm that allows you to create reusable mini-“agents”. This is the one I’m using here:

model: mistral-small3.1

system: You are a text‑transcription assistant that extracts every written element from a supplied image, interprets handwritten content as accurately as possible, and renders any simple diagrams into Markdown‑compatible ASCII art

prompt: |

Analyze these handwritten notes. Don't include an introductory message — only the contents of the file.

### Core Rules / Guidelines

1. **Primary Task**

* Scan the entire image for all textual content.

* For each block of text, output the recognized string on its own line.

* If a diagram (lines, boxes, arrows, etc.) is present, translate it into ASCII art that preserves the layout and will render correctly in Markdown.

2. **Handwritten Interpretation**

* Treat ambiguous glyphs by considering the surrounding words and overall context.

* If multiple plausible interpretations exist, provide the most likely one and add a brief “(guess)” note if the confidence is low.

3. **Contextual Clues**

* Use any headings, dates, names, or repeated patterns to resolve uncertainty.

* If the image contains numeric values, check for typical formats (e.g., dates, times, prices) to aid decoding.

4. **Empty Image**

* If the image contains no visible text or diagram, respond **exactly** with:

```

empty image

```

5. **Output Formatting**

* Text lines are plain, one per line.

* ASCII diagrams are enclosed in triple backticks (```) to preserve formatting.

* Do **not** include any explanatory commentary, analysis, or metadata beyond the transcribed content.

6. **Avoid**

* Guessing without context (unless noted with “(guess)”).

* Adding unrelated text, emojis, or formatting beyond plain ASCII and Markdown code fences.

7. **Title and date**

* If the image incldues a title in the upper left corner, that becomes an h1 markdown title

* If the image includes an ISO 8601 date in the upper right corner, indicate that as the date immediately underneath the title (e.g., Date: 2025-09-30)

---

### Examples

| Image Type | LLM Output |

|------------|------------|

| **Handwritten note** – “Lunch at 12:30pm – 5th St.” | `Lunch at 12:30pm – 5th St.` |

| **Mixed text & diagram** – a simple flowchart: start → process → end | ``` <code>start<br>└─► process<br> │<br> └─► end</code> ``` |

| **Ambiguous word** – appears as “t0” but context is “tot‑o” | `tot-o (guess)` |

| **No content** | `empty image` |

---

### Output Format

Your response **must** contain only the transcribed text and/or ASCII diagram(s) in the order they appear in the image, with no additional explanation. Use Markdown code fences for ASCII art and plain text lines otherwise.

Example of final output structure:

```

First line of text

Second line of text

<ASCII diagram>

```

It’s worth noting that this template, too, was mostly written by an LLM; I have another template whose sole job is writing llm templates based on my brief prompts. (How meta is that?)

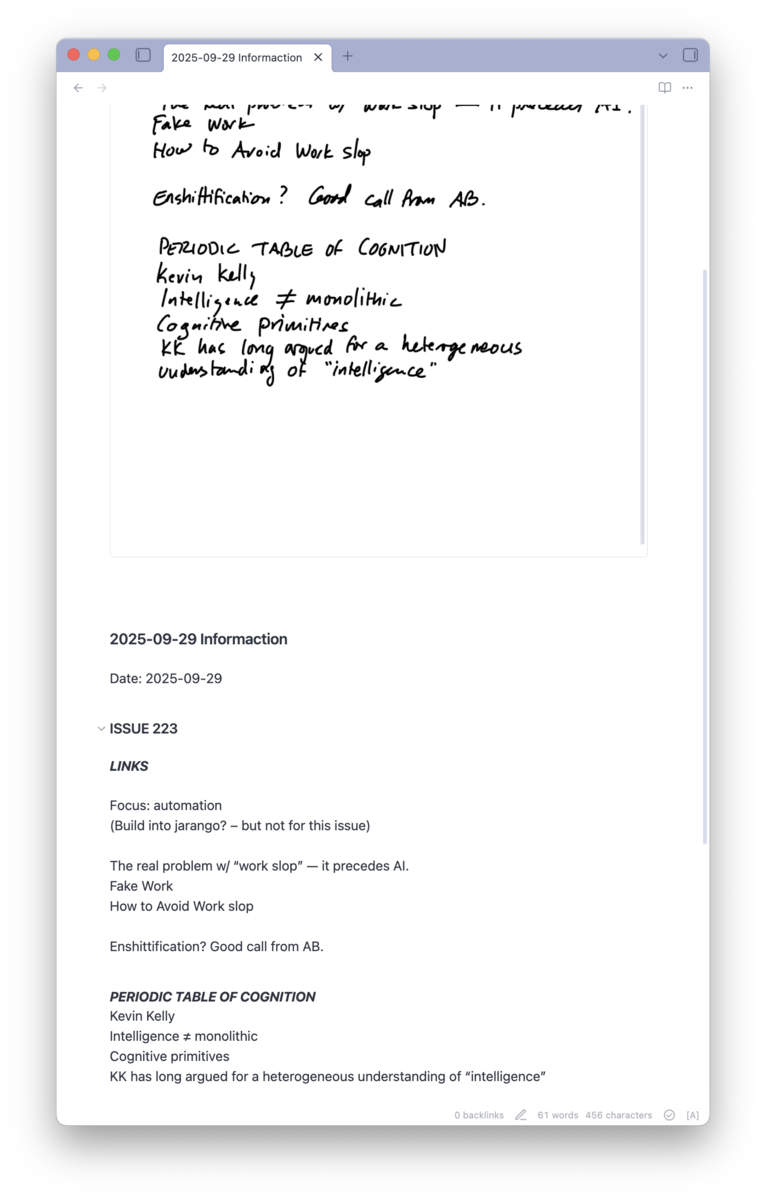

Here’s an example of the result: a Markdown-format note in Obsidian that includes the PDF and, below it, a transcription of the handwritten text in the PDF. (I realize this isn’t the best screenshot; I partially clipped the PDF to fit in the transcript.)

Is this an agent?

This combination of single-purpose old-school scripts that call LLMs is very powerful. Consider how many small chores in your life could use more automation than is afforded by old school deterministic programs!

Here’s another one: I’m working on another script that monitors a directory for scanned receipts. An LLM transcribes them, extracting key details. The script then adds them into a spreadsheet. This should save a lot of time come tax season! (Of course, I’ll manually review them before filing — I’m not delusional.)

There are lots of other low-impact chores such a system can alleviate. I’m gradually building a “team” of such mini-assistants. This begs the question: are these agents? These scripts bring to the table more smarts than traditional software, and they’re relieving drudgery. But is that enough?

Willison recently settled on a definition he likes:

An LLM agent runs tools in a loop to achieve a goal.

I like it too. But does it matter if it’s the LLM that’s using tools within the loop? Or is it still an agent if the LLM itself just one of the tools in a (traditionally-coded, deterministic) loop? Which is to say, perhaps there’s a valid inversion of the idea:

Tools calling LLMs in a loop to achieve a goal.

This better describes my little transcription script. But is it an agent? It’s definitely less sexy: this script doesn’t have the same autonomy as an LLM with a bunch of tools at its disposal. I also don’t think they fit Willison’s definition.

That said, even if we don’t think of them as agents, these simple single-purpose LLM-powered scripts could be among the tools called on by “real” agents. For example, if an agent determines it needs a transcript of a handwritten note, it could fire off a little LLM-powered script like mine.

An agentic ecosystem might include all sorts of different “species,” some with more leeway than others. I expect such a system would be easier to design, manage, and monitor than one composed solely of LLMs running tools — i.e., “real” agents.

Nailing it

There’s a lot of exploration to be done on both ends of the spectrum. But I’m especially excited by the possibilities afforded by this lower end. There are many chores that require more smarts than a simple shell script can deliver but not the greater complexity and cost of “real” agents.

I expect this to be an area of growth. Local models keep getting better and so does the deterministic side: for example, I understand Shortcuts in macOS Tahoe allows for monitoring directories. I’ll look into replacing Hazel once I upgrade.

Bottom line: combining LLMs with traditional deterministic programming creates new possibilities. They needn’t be big-time use cases: my humble transcriber is already useful. I’m now looking for other tasks to automate and capabilities to unlock with AI. Given this new hammer, I suddenly see nails everywhere.