The AI hype cycle may be cresting. At this point, many companies have developed AI-powered “solutions” aimed at bumping stock prices rather than serving customer needs. The results are disappointing. But if used well, AI could unlock tremendous value.

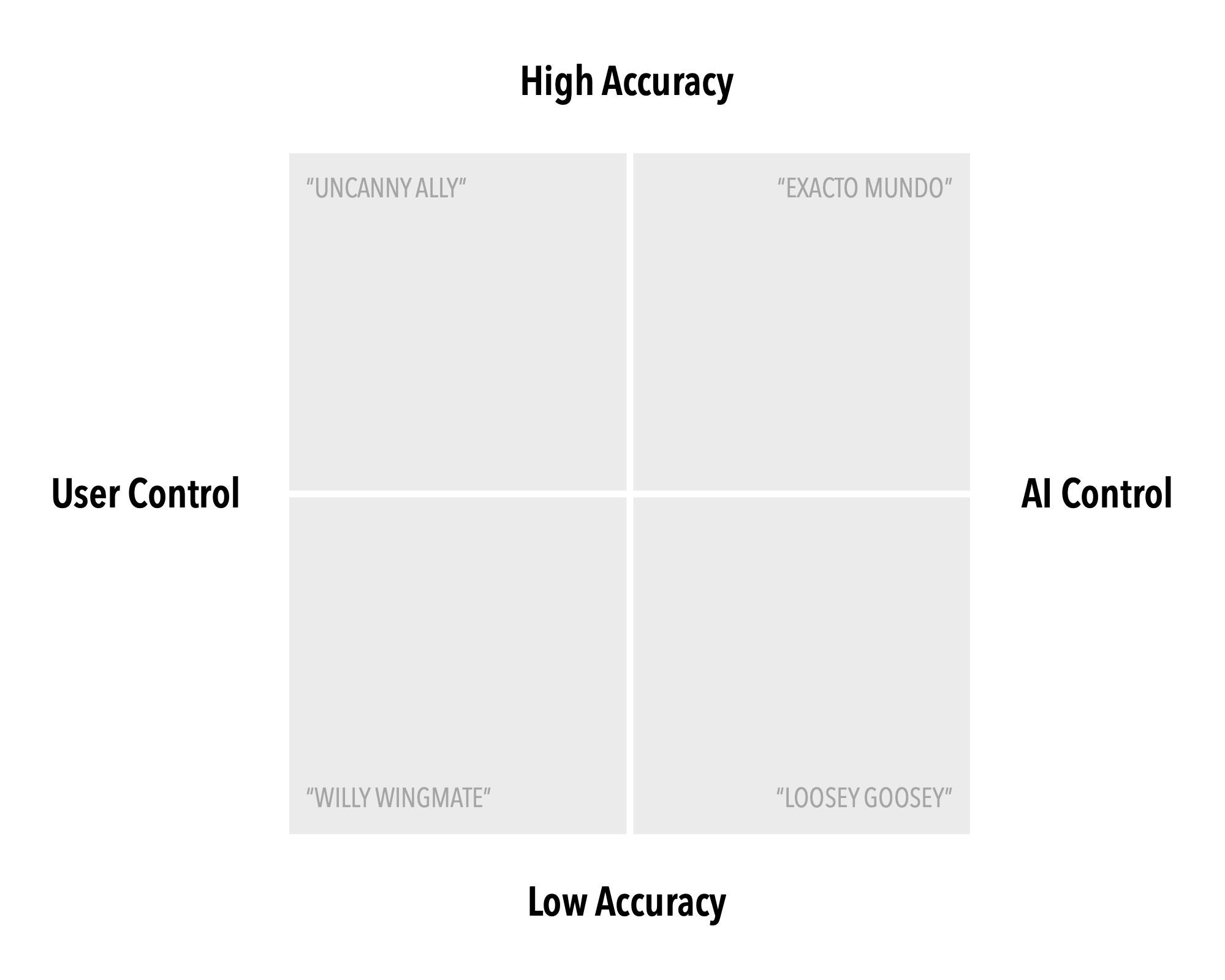

As I’ve been experimenting with AI, I’ve wondered what “used well” might look like. There are several scenarios, and they’re all different. My current model of the possibility space is a two-by-two matrix:

The vertical axis covers the level of accuracy required. The top end includes use cases that call for a high degree of accuracy. At the bottom, we have the ones where ‘good enough’ will do.

The horizontal axis covers the user’s degree of agency in the interaction. On the left, the user has full control over outcomes. On the right, the AI has full control.

Some use cases require lots of accuracy, but not all. And some lend themselves more naturally than others to keeping humans in the loop. Let’s look at a few examples.

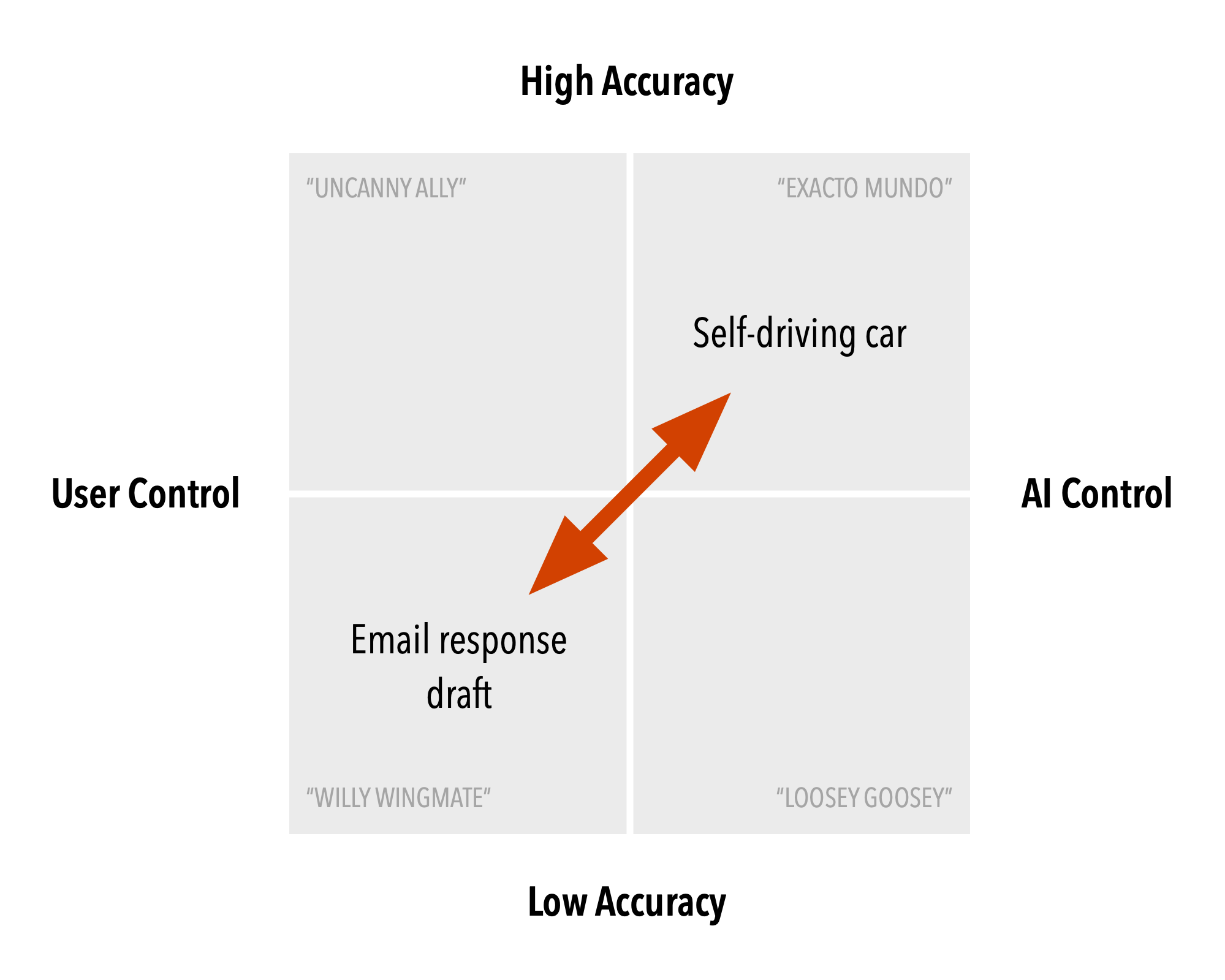

Consider an email client that uses AI to draft responses. This is a low-accuracy, user-directed use case: the user can edit the email before sending it or choose not to send it at all. As a result, it doesn’t matter much if the AI doesn’t get it perfect on the first go.

On the flip side, a self-driving car is a high-accuracy, AI-directed use case. There’s little margin for error: mistakes can gravely harm people and property. On top of it all, the passenger can’t do anything about it. (And of course, they’re among the people who can be harmed.)

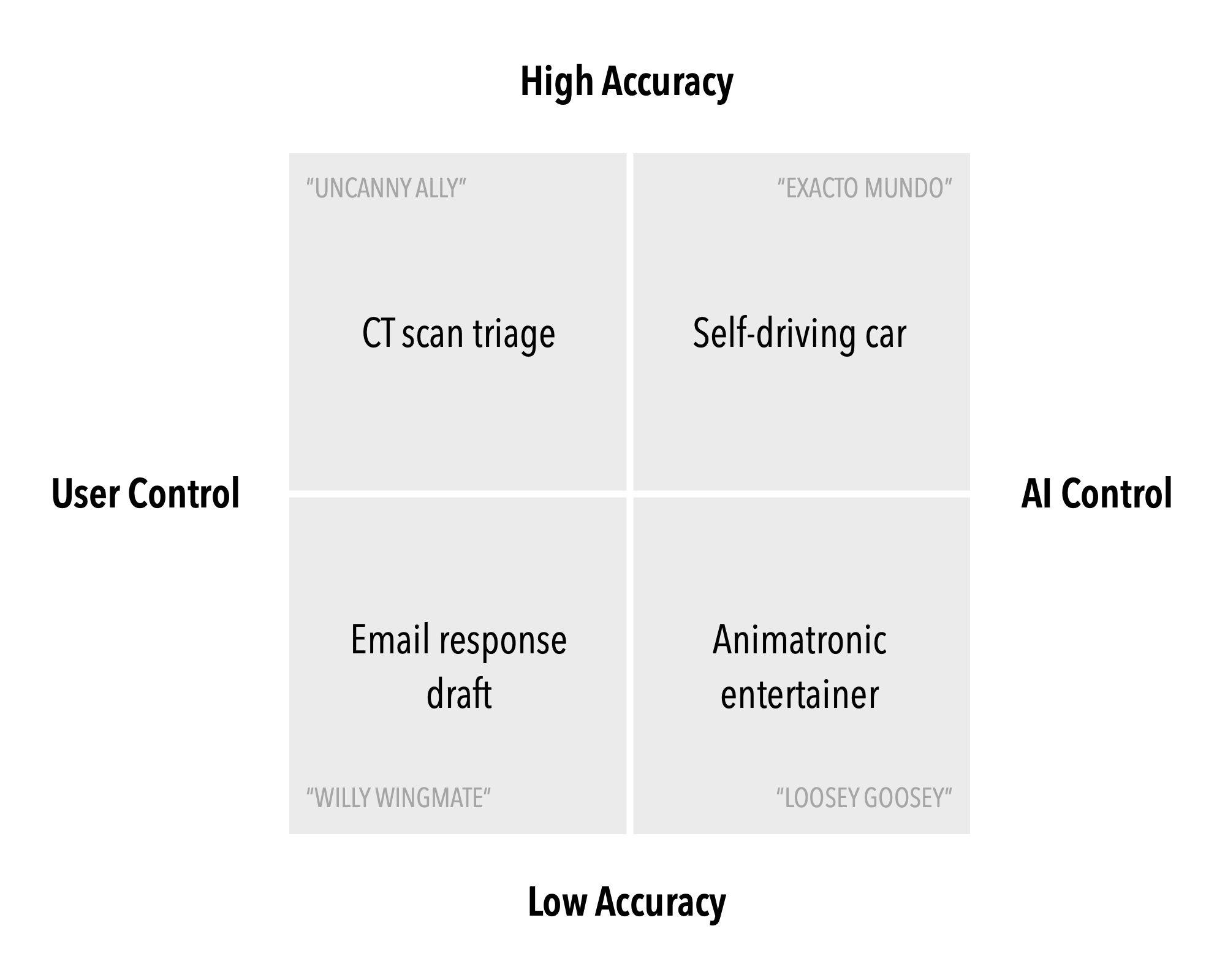

You might expect all use cases to fall into one of these two quadrants, but they don’t. Some require user agency and high accuracy. Imagine using an AI to prioritize CT scans that show signs of tumors. In this scenario, you want as much accuracy as possible, but a radiologist makes the final call. The AI is merely used for triage.

The opposite quadrant includes cases where the user has little control but it doesn’t matter much if the AI gets it wrong. Consider a hypothetical theme park robot that compliments guests’ outfits. The guest can’t do much about it, but it’s not a big deal if the animatronic says the wrong thing.¹
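One way to make the matrix concrete is to treat the two axes as fields on a record and group use cases by the pair of values. The following is a minimal Python sketch, not a definitive model: the names `Accuracy`, `Control`, `UseCase`, and `by_quadrant` are hypothetical and chosen only for illustration, and the binary split on each axis is an assumption that flattens what are really continuous scales.

```python
from collections import defaultdict
from dataclasses import dataclass
from enum import Enum


class Accuracy(Enum):
    # Vertical axis: how much accuracy the use case requires.
    GOOD_ENOUGH = "good enough"
    HIGH = "high accuracy"


class Control(Enum):
    # Horizontal axis: who controls the outcome.
    USER = "user-directed"
    AI = "AI-directed"


@dataclass(frozen=True)
class UseCase:
    name: str
    accuracy: Accuracy
    control: Control

    def quadrant(self) -> str:
        # A quadrant is just the pair of axis values.
        return f"{self.accuracy.value}, {self.control.value}"


# The four examples discussed in the text, placed as the text places them.
EXAMPLES = [
    UseCase("email reply drafts", Accuracy.GOOD_ENOUGH, Control.USER),
    UseCase("self-driving car", Accuracy.HIGH, Control.AI),
    UseCase("CT scan triage", Accuracy.HIGH, Control.USER),
    UseCase("theme park robot", Accuracy.GOOD_ENOUGH, Control.AI),
]


def by_quadrant(cases):
    """Group use case names by the quadrant they fall into."""
    groups = defaultdict(list)
    for case in cases:
        groups[case.quadrant()].append(case.name)
    return dict(groups)


if __name__ == "__main__":
    for quadrant, names in by_quadrant(EXAMPLES).items():
        print(f"{quadrant}: {', '.join(names)}")
```

Each of the four examples lands in its own quadrant, which is the point of the exercise: accuracy and control vary independently.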

So far, I’ve called out specific applications: drafting emails, triaging CT scans, transporting passengers, and entertaining guests. These scenarios are easy to locate on the matrix, since they address particular uses. But what about broader systems, such as ChatGPT and Claude?

General-purpose AI chatbots can obviously serve both high-accuracy and low-accuracy use cases. For example, it won’t matter much if an LLM writes a bad limerick, but a bad contract could get a company in trouble if not vetted by a human lawyer.

And therein lies the rub: all interactions with general-purpose AIs fall on the “user-agency” half of the matrix. The AI-written contract might suck, but it’s up to the user to decide if a lawyer will review it.

There are obvious challenges here. For one, this is the first time we’ve interacted with systems that match our linguistic abilities while lacking other attributes of intelligence: consciousness, theory of mind, pride, shame, common sense, etc. AIs’ eloquence tricks us into accepting their output even when we lack the competence to evaluate it.

The AI-written contract may be better than a human-written one. But can you trust it? After all, if you’re not a lawyer, you don’t know what you don’t know. And the fact that the AI contract looks so similar to a human one makes it easy for you to take its provenance for granted. That is, the better the outcome looks to your non-specialist eyes, the more likely you are to give up your agency.

Another challenge is that ChatGPT’s success has driven many people to equate AIs with chatbots. As a result, the current default approach to adding AI to products entails awkwardly grafting chat onto existing experiences, either for augmenting search (possibly good) or replacing human service agents (generally bad).

But these ‘chatbot’ scenarios only cover a portion of the possibility space, and not even the most interesting one. Current technologies struggle with accuracy. Techniques like graph RAG help, but for the most part we’re still operating in the ‘Willy Wingman’ quadrant.

But as suggested above, the open-ended nature of chat UIs makes it difficult to set expectations about user agency and AI competency. Users think they’re interacting with an Uncanny Ally when they’re really dealing with a Willy Wingman — and they have no way to tell them apart.

So I’m skeptical when a product ‘adds AI’ by including a general-purpose chat feature. But it’s early days. As happens with many new technologies, there will be lots of highly visible failures. One can expect many orgs will decide AI was a mistake and pull back on their (ill-advised) investments.

Then, we can carry on exploring ways to use these amazing new technologies to solve real problems in ways that honor user needs and expectations. It can’t happen soon enough: AI has genuine potential to improve lots of things. We just have to be more thoughtful about how we go about it.

---

¹ Or is it? What if it says something racist? The ‘AI-controlled’ end of the spectrum is tricky; I struggled to come up with good examples for this quadrant.