There’s a lot to information architecture. Metadata, taxonomies, semantic structures, navigation schemes — the list goes on and on, should you want to go deep. To top it off, tech keeps changing things up. (AI is just the latest development to transform the discipline.) That said, beneath the complexity lie a few simple principles that stand the test of time.

I recently revisited the foreword Peter Morville wrote for Information Architecture: Designing Environments for Purpose (2004), in which he traces the history of the discipline. In that essay, he draws a distinction between two perspectives on IA:

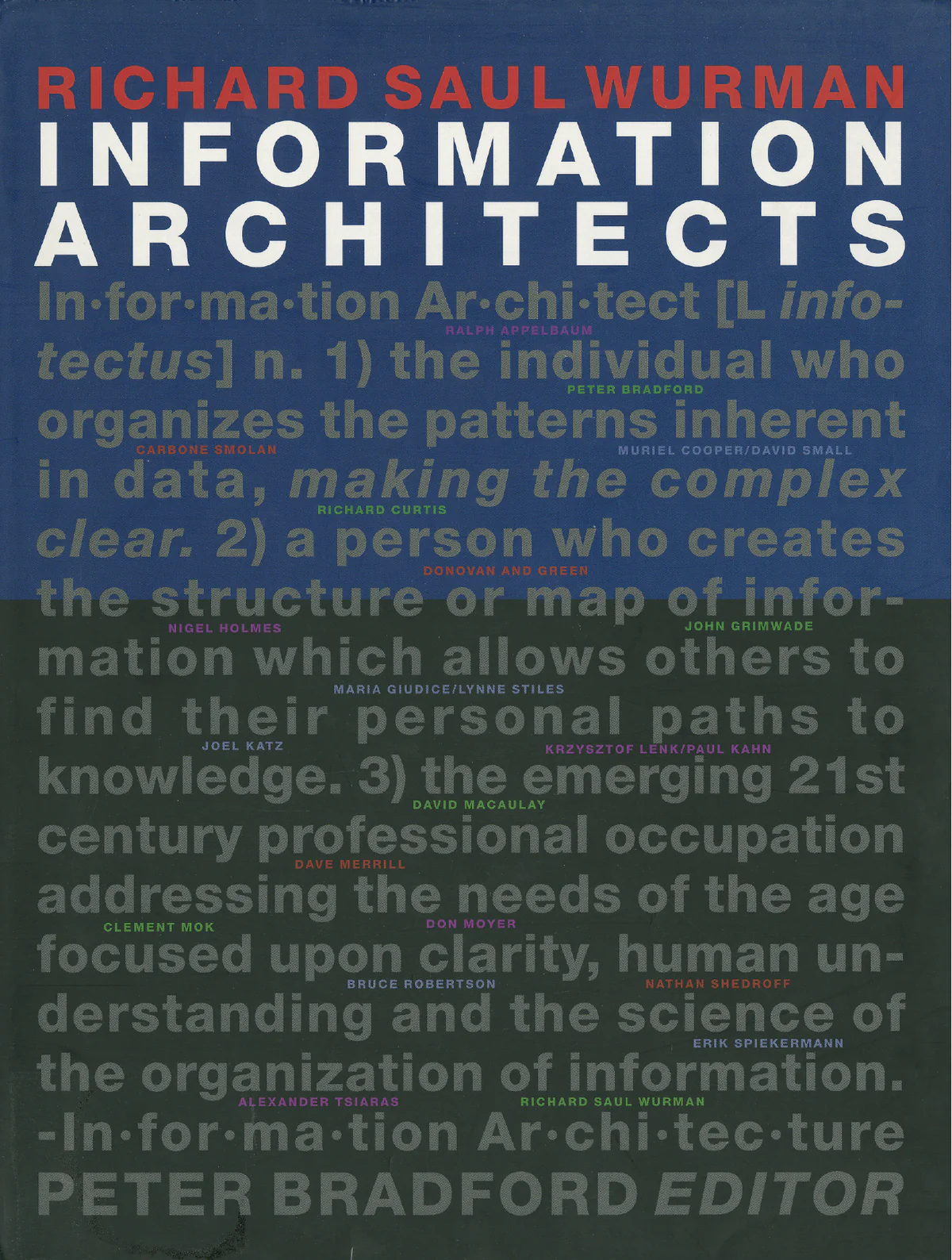

In 1996, a book titled Information Architects appeared in our offices. We learned that a fellow by the name of Richard Saul Wurman had coined the expression ‘information architect’ in 1975. After reading his book, I remember thinking ‘this is not information architecture, this is information design’.

And so, while some folks adhered to the Wurman definition, we became evangelists of the LIS (library and information science) school of information architecture. We argued passionately for the value of applying traditional LIS skills in the design of websites and intranets. We hired ‘information architects’ and taught them to practise the craft. We embraced other disciplines, integrating user research and usability engineering into our process. And, along the way, we built one of the world’s most admired information architecture firms.

I’m one of the folks who’s always adhered to Wurman’s take. I came to IA via his 1996 book; its brilliant three-part definition of IA (worn proudly on the book’s cover jacket) still informs my practice to this day.

But Morville and Rosenfeld’s book, Information Architecture for the World Wide Web (the “polar bear book”), was also hugely influential on my practice. As suggested in the passage above, the examples in Wurman’s book mostly covered information design. The polar bear book focused IA on my medium, the web. I also had the privilege of working with Lou and Peter on its fourth edition. I obviously love this book.

That said, I’ve never bought into this distinction. As I see it, there isn’t a “Wurman IA” and a “polar bear IA”: there’s only IA. The final results may look different, but both approaches tap into the same principles. These IA “first principles” apply to structuring digital systems, but also to any other endeavor that aims for clarity.

Let’s unpack them.

People only understand things relative to things they already understand

This one comes from Wurman. The idea is simple: we can’t grasp concepts on their own. Instead, we scaffold understanding on sets of relations between ideas. ‘Darkness’ is meaningless except in relation to its opposite, ‘lightness.’ That duality is obvious: you learned it as a child. But consider a concept you learned recently. It’s likely you grokked it only by relating it to, and contrasting it with, other ideas you already knew.

For example, I remember when I first learned about ‘skeuomorphism.’ The concept and word seemed so alien! But the article where I first read it explained it using examples of mobile apps: some mimicked real-world materials (such as green felt) while others used a ‘purer’ minimalist aesthetic. I’d used both types of apps. Contrasting them as opposite ends of a continuum gave me reference points for this new idea. The word became a useful signifier for an important design concept. But I only understood it because I already understood the aesthetics of app UIs.

This is how we learn: by relating new ideas to ideas we already understand. Mental models grow by accretion. There are no ‘intuitive’ information structures, only learnable ones; our job is to build scaffolding that allows people to find their way to knowledge.

People only understand things in context

This principle is related to the first. I understood it implicitly, but only came to fully appreciate it via Andrew Hinton’s wonderful book Understanding Context. The gist is that where we encounter concepts changes how we understand them. Consider the verb ‘save’: it means different things in a bank, a church, and a computer. Same word, completely different meanings depending on the context.

Architecture and art students learn about the difference between figure and ground. You might think you’re more interested in one (usually the figure), but they don’t exist independently. The ground isn’t just a stage or background to the figure: it’s integral to what you experience as the ‘figure.’ The figure and ground are a single conceptual construct.

Same idea with language. People don’t experience terms in a navigation structure in isolation. Everything around them, including the site’s branding, section headings, and other terms in the navigation structure, creates a context that changes how users interpret their meaning. The choices in the menu are experienced within a context, but they also create that context. Figure/ground, yin/yang, heads/tails: none of these exists as an independent entity. Each is understood only in relation to a context it helps create.

People rely on patterns and consistency

Have you ever been in a completely novel environment, one that felt utterly unfamiliar? Perhaps it was in a country with different customs where you didn’t speak the language. It’s disorienting! You might find yourself unable to make good decisions.

The brain is a pattern-matching organ. Skillful decision-making requires picking up recognizable and predictable patterns. It could be particular arrangements for street crossings or the strings of characters that form words and sentences. As you read this, your brain is filling in more gaps than you might ________. What word did you expect to come at the end of the previous sentence? Expect? Believe? In either case, I bet it wasn’t ‘pineapple.’ Or consider this sequence of characters:

WHT D Y THNK THS SNTNC MNS?

Your mind has redundancy mechanisms built in. You fill in the gaps – as long as you understand the elements that create the gaps. Environments that present consistent and recognizable patterns are easier to understand and navigate. They provide clues for your mind to fill in the rest.

People seek to minimize cognitive load

Implicit in these principles is the idea that functioning effectively in complex environments requires brainpower. Some thinking happens intentionally, with explicit effort (e.g., learning a new word, such as ‘skeuomorphic’) while some happens behind the scenes. Why make any of it harder than it need be?

People prefer to interact with things they find easy to use, i.e., things that don’t require a lot of cognitive effort. Designing such things requires understanding both the system’s content and the mental models of the people interacting with it. Understanding both requires research. The touchstone here is Steve Krug’s book Don’t Make Me Think, whose title perfectly encapsulates this principle.

Thomas Jefferson said, “Take things always by their smooth handle.” As a designer, it’s up to you to design smooth handles. When it comes to information environments, this means creating labels and distinctions that don’t strain the user’s cognitive abilities more than necessary.

People have varying levels of expertise and familiarity

This principle is related to the previous one. Expertise isn’t evenly distributed. Some people know more than others about particular subject domains. On the flip side, some systems require more specialized knowledge than others. Here are a couple of examples.

I once helped design a system used for trading energy futures. The intended audience (energy traders) was highly specialized. These folks use particular jargon and need to see a lot of information at a glance. The resulting UI would seem cryptic to anybody but energy traders. On the opposite end, I once helped design a system whose intended audience was anybody living in the state of California. Some users might not even speak English. This called for a much broader, less specialized set of labels and semantic structures.

Again, do the research. Know who you’re designing for and what they need to accomplish. A system that seems simple to you might be indecipherable to someone else. Conversely, you may find yourself inclined to oversimplify things that should be targeted more narrowly.

People are goal-oriented

As implied by the previous principle, people come to information systems because they’re trying to get something done. This doesn’t necessarily mean that everyone is consciously trying to solve for some specific task. For example, their unspoken goal might be wasting time or being entertained. But unless they wandered in randomly, they’re likely there for a reason (or maybe several reasons).

What is that? Do they want to pay their credit cards? Enroll their kids in school? Buy a new crochet set? Whatever it is, the system’s language and structures must make completing their intended tasks 1) possible and ideally 2) easy. (See the cognitive load principle above.) This entails using familiar language and distinctions.

People often don’t know what they’re looking for

That said, in some cases — in many cases! — they don’t know what they don’t know. And often, it’s stuff they need to know to successfully complete their tasks. More specifically, people often know the thing they’re looking for exists, but they don’t know what it’s called. As a result, they don’t know what to look for.

In her paper “The Design of Browsing and Berrypicking Techniques for the Online Search Interface,” Marcia Bates offered berrypicking as a metaphor for how people find information. Rather than go directly to the right search term, people meander through different systems, picking up ‘berries’ (terms) that help them refine their search. In the process, they learn the language they need to be conversant in the space. They ask better questions and, consequently, make better searches. A virtuous cycle ensues.

This idea has a couple of important implications for design. First, the environment should include concepts users recognize as ‘berries.’ Second, (some) environments must provide different types of berries — different pathways — to accommodate users with different levels of expertise. (See the concept of progressive disclosure.)

Information is more useful when it’s actionable

The ultimate point of information is helping people act more skillfully. A “no pooping allowed” sign on a front lawn is information: it lets pet owners decide whether it’s okay to let their dogs go there (and thereby avoid an unpleasant confrontation). The same goes for clear categories in an online store, or the options in a hospital website’s navigation menus.

Which is to say, IA doesn’t merely inform people in the abstract. The semantic structures users encounter aren’t merely “good to know”; they allow them to do things. So it helps to think of options and labels as having degrees of ‘actionability.’ Some are obviously actionable; others are head-scratchers. Does the user know what to do with a particular option? Do they understand how it’s relevant to what they’re trying to do? Is it in language they understand? Can they successfully predict the effects of choosing one option over another?

Choices should be clear and relevant, and their effects predictable, to the degree possible, while acknowledging that some domains are inevitably complex and/or unfamiliar. Clear options that are obviously relevant to the task at hand reduce cognitive load and enable people to get things done quickly and efficiently.

Toward a Different Framing for Design

You may have picked up that these principles all relate to and reinforce each other. They define a particular area of focus that makes information architecture unique among design disciplines, regardless of whether the final deliverable is a website, a diagram, or anything else.

In his 1996 book, Wurman defined an information architect as:

- the individual who organizes the patterns inherent in data, making the complex clear.

- a person who creates the structure or map of information which allows others to find their personal path to knowledge.

- the emerging 21st century professional occupation addressing the needs of the age focused upon clarity, human understanding and the science of the organization of information.

All three are as relevant today as they were twenty-eight years ago. A quarter of the way into the 21st century, it’s fair to say the discipline hasn’t yet ‘emerged.’ But as information systems become more complex, it’s increasingly obvious that we need a new approach to designing and managing them.

The dominant metaphor today casts them as ‘products.’ It’s useful insofar as it offers an understandable framing for business leaders, but it’s certainly not the only one possible. Some digital systems are more like places than like products. A bank’s mobile app isn’t a product in the sense that a checking account is a product. Instead, the app is the place where people purchase and use the company’s products, get help when needed, interact with other customers, and more — much like they did in physical branches. (See my book Living in Information for more on this line of thinking.)

Designing digital contexts that make it possible for people to get things done requires a discipline that combines aspects of architecture and information sciences. Information architecture is that discipline. By exploring its first principles, we can transcend the constraints of particular media to understand what is universal and unique about IA. This is my first pass at such a list; please reach out if there are other principles that should be included.